In lab and small-scale environments, it’s common to rely almost entirely on VM-level backups. That’s exactly how my lab is built: a single virtual machine runs several services I depend on regularly, and it’s protected by a daily, CBT-enabled backup job. These backups are crash-consistent, efficient, and most of the time perfectly adequate.

But as soon as a VM hosts applications with persistent state, especially database-backed services like phpIPAM, the limitations of crash-consistent backups become more relevant. Recently, I decided to address that gap by adding a lightweight, application-aware database backup triggered via a VMware snapshot freeze script. This post explains why that extra step matters and outlines the approach.

Crash-Consistent Backups: A Solid Foundation

Crash-consistent VM backups capture the contents of a VM’s disks at a point in time, similar to an unexpected power loss followed by disk imaging. With modern platforms, this generally works well:

- Journaled filesystems recover cleanly

- Databases include crash recovery logic

- CBT-based backups are fast and reliable

For stateless workloads or even lightly used applications, restoring a crash-consistent VM often results in a usable system with minimal effort. The problem isn’t that crash-consistent backups are bad; it’s that they don’t guarantee application consistency.

Where Things Break Down: Application Consistency

Databases are resilient, but resilience doesn’t equal certainty. A crash-consistent restore may leave you with:

- Rolled-back or partially committed transactions

- Extended database recovery times

- In edge cases, unrecoverable corruption

In a lab, that risk might seem acceptable… until the data becomes something you actually care about. In my case, phpIPAM stores IP allocations and metadata that would be tedious and error-prone to reconstruct manually.

VM-level backups protect the system; application-level backups protect the data.

Why Add Database Backups When You Already Back Up the VM?

Adding a database dump alongside VM backups provides several practical advantages:

- Known-good consistency: A successful dump proves the data was logically consistent at backup time

- Faster recovery: Restoring a database is often quicker than restoring an entire VM

- Targeted restores: Recover application data without rolling back the OS

- Portability: Restore the data to a new VM or environment if needed

Even if you never use the database backup directly, it significantly reduces uncertainty during recovery.
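To illustrate the "known-good consistency" point, here is a minimal sketch of a check that a compressed dump is readable and complete. It relies on the "-- Dump completed" trailer that mysqldump and mariadb-dump normally write as the last line of a dump (the trailer is absent if comments are disabled, for example with --skip-comments). The function name verify_dump and the path in the usage comment are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: returns 0 only if the gzip archive is intact and the
# dump ends with the completion trailer written by mariadb-dump/mysqldump.
verify_dump() {
    dump_file="$1"

    # gzip -t tests archive integrity without extracting it
    gzip -t "$dump_file" 2>/dev/null || return 1

    # The last line of a complete dump looks like "-- Dump completed on <date>"
    gzip -dc "$dump_file" | tail -n 1 | grep -q '^-- Dump completed'
}

# Example (path is illustrative):
# verify_dump /data/_backup/devipam/2024-01-01-devipam.sql.gz && echo "dump looks complete"
```

A check like this only shows that the dump finished writing; it does not replace an occasional test restore.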

Coordinating Database Backups with VM Snapshots

The challenge is timing. Ideally, the database backup completes just before the VM is backed up, so that the VM backup contains a very recent application-aware dump.

I could schedule a cron job inside the guest OS for 12:30 AM and run the VM backup at 1:00 AM, and I’d most likely get a recent dump. However, if I later changed the time of the backup job for some reason, I might forget to adjust the corresponding cron job.

Some backup products can run custom scripts that could trigger this dump, but in my testing they required guest OS credentials, which would then need to be managed.

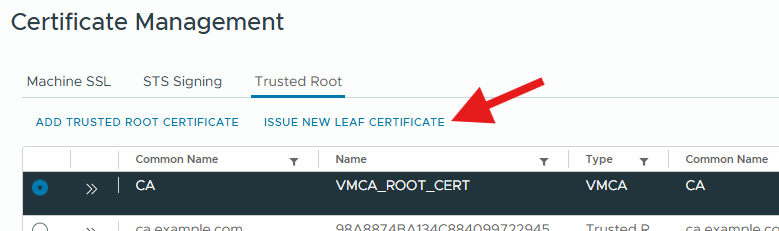

VMware supports running scripts via custom quiescing (freeze/thaw) scripts, which are executed inside the guest OS immediately before and after a snapshot operation. This mechanism is documented here: https://knowledge.broadcom.com/external/article/313544/running-custom-quiescing-scripts-inside.html

By leveraging this capability, the database dump can run automatically during the backup workflow, without requiring changes to the backup product itself. It also runs for snapshots created in the GUI when the ‘Quiesce guest file system (requires VM tools)’ checkbox is selected.
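As a rough sketch of the mechanism, a custom quiescing script can branch on the single argument it receives. As I read the linked article, VMware Tools calls each script with freeze before the snapshot and with thaw afterwards (or freezeFail if the freeze step failed). The function name and the messages below are placeholders for illustration only.

```shell
#!/bin/bash
# Hypothetical dispatcher for a custom quiescing script.
handle_quiesce_event() {
    case "$1" in
        freeze)
            # Runs before the snapshot: start the application-aware backup here
            echo "freeze: running pre-snapshot tasks"
            ;;
        thaw)
            # Runs after the snapshot has been taken
            echo "thaw: snapshot taken, nothing to clean up"
            ;;
        freezeFail)
            # Runs instead of thaw when quiescing did not succeed
            echo "freezeFail: quiescing failed, cleaning up"
            ;;
        *)
            echo "unexpected argument: ${1:-<none>}" >&2
            return 1
            ;;
    esac
}

# Only dispatch when an argument was actually supplied
if [ "$#" -gt 0 ]; then
    handle_quiesce_event "$1"
fi
```

Handling thaw and freezeFail explicitly, even as no-ops, makes it obvious that the backup work belongs only in the freeze branch.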

Freeze Script

At a high level, the freeze script:

- Is invoked automatically by VMware Tools

- Runs immediately prior to snapshot creation

- Triggers a database dump (for example, via mysqldump or mariadb-dump)

- Blocks snapshot creation until the dump completes

Example Freeze Script

The freeze script is custom code, unique to each environment and likely to each VM. In my case, I created a backupScripts.d folder in the existing /etc/vmware-tools/ path. In that backupScripts.d folder, I created a shell script named backupTask.sh with the following content:

    #!/bin/bash

    # Create a variable for today's date (printf %(...)T requires bash 4.2+)
    printf -v date '%(%Y-%m-%d)T' -1
    echo "Starting backup for ${date}"

    # VMware Tools passes "freeze" as the first argument before the snapshot
    if [ "$1" == "freeze" ]
    then
        # devipam: dump all databases, then compress and move the dump
        docker exec devipam-devipam-mariadb-1 mariadb-dump --all-databases -uroot -p"VMware1!" > "/tmp/${date}-devipam.sql"
        gzip "/tmp/${date}-devipam.sql"
        mv "/tmp/${date}-devipam.sql.gz" /data/_backup/devipam/
    fi

This is an early example of the script. I subsequently made changes to improve logging (writing to a file instead of the console) and to remove the password from the script. I wanted to include this sample because it contains the core logic needed: a check for the input parameter ‘freeze’, followed by the commands to run for the backup inside that if statement.
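The two follow-up changes mentioned above (logging to a file and keeping the password out of the script) could look roughly like the sketch below. This is an illustration rather than the final script. It assumes the container was started with a MARIADB_ROOT_PASSWORD environment variable, which the official MariaDB image supports, and the LOG_FILE default is an assumed path.

```shell
#!/bin/bash
# Sketch only: CONTAINER and BACKUP_DIR mirror the example above;
# LOG_FILE is an assumed location.
CONTAINER="${CONTAINER:-devipam-devipam-mariadb-1}"
BACKUP_DIR="${BACKUP_DIR:-/data/_backup/devipam}"
LOG_FILE="${LOG_FILE:-/var/log/backupTask.log}"

log() {
    # Append a timestamped message to the log file instead of the console
    echo "$(date '+%Y-%m-%d %H:%M:%S') $*" >> "$LOG_FILE"
}

run_backup() {
    today="$(date +%Y-%m-%d)"
    tmp_file="/tmp/${today}-devipam.sql"

    log "Starting backup for ${today}"
    # The password is read from the container's own environment (assumption:
    # MARIADB_ROOT_PASSWORD is set there), so it never appears in this script.
    if ! docker exec "$CONTAINER" sh -c \
        'exec mariadb-dump --all-databases -uroot -p"$MARIADB_ROOT_PASSWORD"' \
        > "$tmp_file" 2>> "$LOG_FILE"; then
        log "Dump failed; removing partial file"
        rm -f "$tmp_file"
        return 1
    fi

    if gzip -f "$tmp_file" && mv "${tmp_file}.gz" "$BACKUP_DIR/"; then
        log "Backup written to ${BACKUP_DIR}/${today}-devipam.sql.gz"
    else
        log "Compressing or moving the dump failed"
        return 1
    fi
}

# Only act on the pre-snapshot call from VMware Tools
if [ "${1:-}" = "freeze" ]; then
    run_backup
fi
```

Checking the exit status of the dump before compressing it avoids leaving a truncated file in the backup folder that looks like a valid dump.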

Restore Scenarios

With both VM and database backups available, recovery becomes more flexible:

- Minor data issue – Restore the database dump

- Application failure – Restore application data without touching the OS

- OS-level issue – Restore the VM

- Worst case – Restore the VM and validate data using a known-good dump

This layered approach reduces both recovery time and risk.
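For the first scenario, restoring the dump could be sketched as below: the compressed dump is streamed into the mariadb client inside the container. The container name comes from the earlier example. The function name, the path in the usage comment, and the reliance on a MARIADB_ROOT_PASSWORD variable inside the container are assumptions.

```shell
#!/bin/bash
# Hypothetical restore helper: streams a gzip-compressed SQL dump into MariaDB.
restore_dump() {
    dump_file="$1"
    container="${2:-devipam-devipam-mariadb-1}"

    if [ ! -r "$dump_file" ]; then
        echo "Cannot read dump file: $dump_file" >&2
        return 1
    fi

    # -i keeps stdin open so the SQL can be piped into the client
    gzip -dc "$dump_file" | docker exec -i "$container" sh -c \
        'exec mariadb -uroot -p"$MARIADB_ROOT_PASSWORD"'
}

# Example (path is illustrative):
# restore_dump /data/_backup/devipam/2024-01-01-devipam.sql.gz
```

Because the dump was taken with --all-databases, replaying it affects every database it contains, so it is worth trying the restore against a scratch container first.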

Conclusion

Crash-consistent VM backups are an excellent baseline and, for many workloads, entirely sufficient. But once a service becomes important enough that its data matters, relying solely on crash consistency introduces uncertainty.

By adding a simple, application-aware database backup triggered via a VMware freeze script, you can significantly improve recoverability with minimal complexity. The result is a backup strategy that protects not just the VM, but the data that actually matters.