My homelab has a small UPS to help carry through short power ‘blips’ that occur from time to time — it is not sized for prolonged outages. As such, I don’t have any automation to detect/respond to these on battery events. Recently some storms rolled through, causing a power outage long enough that everything in the lab powered down hard. This has happened to me before and I always learn something from the recovery efforts. This post will recap some of the latest lessons learned.

Lesson #1: VMFS locking

My lab has one ESXi host that I like to consider a ‘disaster recovery’ host. I try to limit any sort of external dependencies, it uses standard virtual switches, local disk, etc. and only runs a few VMs, like a secondary domain controller / DNS server, backup target for VCSA backups, and the repository for a backup system. When I’m powering things up, this is the first host that I bring online and I power on these VM guests before any others. However, this time powering on a guest resulted in a “Failed to power on virtual machine dr-control-21. Insufficient resources.” error message. I tried another VM on the same host/storage and received the same error. I tried a few initial troubleshooting steps:

- Reboot host.

- Confirm memory/CPU counts match expected values to rule out resource failure.

- Verified host licensing wasn’t expired.

- SSH to host and manually create/delete files from VMFS datastore.

- Attempt to power on a VM on a different disk on the same host — success.

All of these steps checked out okay, so next I went to the vmkernel.log file and watched it as I tried to power on a VM on this specific SSD. I included a few entries from that log below:

2023-02-28T14:34:49.190Z cpu0:265717)WARNING: SwapExtend: 719: Current swap file size is 0 KB.

2023-02-28T14:34:49.190Z cpu0:265717)WARNING: SwapExtend: vm 265717: 727: Failed to extend swap file. type=regular from 0 KB to 10240 KB. currently 0. status=Busy

2023-02-28T14:45:44.724Z cpu1:264688 opID=44d4b0b6)Fil6: 4094: 'dr-esx-31_ssd': Fil6 file IO (<FD c17 r6>) : Busy

2023-02-28T14:45:44.724Z cpu1:264688 opID=44d4b0b6)Fil6: 4060: ioCtx: 0x454917beddc0, world: 264688, overallStatus: Lost previously held disk lock, token: 0x0, tokenStatus: N, txnToken: 0x0, txnTokenStatus: N, totalIOSize: 3761, maxIOLength: 3761The first couple of lines included above pointed me at a KB article that seemed helpful: Powering on a virtual machine fails with the error: Failed to extend swap file from 0 KB to xxxx KB (2030250) (vmware.com). I tried to create a memory reservation as suggested, but that operation wouldn’t complete.

My next thought was that the physical SSD drive had failed. This is a consumer grade device that has been running 24×7 for years, so failure wouldn’t be a huge surprise. To check this, I found the ID of the disk using esxcli storage core device list. I then checked the physical disk with the following command:

esxcli storage core device smart get -d t10.ATA_____WDC_WDS500G1B0B2D00AS40__________________163763421472________This returned a table that showed no fail or error counts for the disk, suggesting that it was physically healthy. I then remembered a tool that I haven’t used before, vSphere On-disk Metadata Analyzer (voma) described here: https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.storage.doc/GUID-6F991DB5-9AF0-4F9F-809C-B82D3EED7DAF.html. I was at a point where I assumed these VMs were lost and would need rebuilt anyway, so why not give this a try first? I removed the VMs on this disk from inventory and rebooted the host so that everything would be as clean as possible. I then ran voma to check VMFS with the following syntax:

voma -m vmfs -f check -d /vmfs/devices/disks/t10.ATA_____WDC_WDS500G1B0B2D00AS40__________________163763421472________This check did find some errors:

Phase 1: Checking VMFS header and resource files

Detected VMFS-6 file system (labeled:'dr-esx-31_ssd') with UUID:5f80a2f2-d98ebf77-71a5-b8aeed72cacf, Version 6:82

Found stale lock [type 10c00003 offset 153174016 v 10, hb offset 3440640

gen 247, mode 1, owner 612cc20b-2b16ed7f-e19d-b8aeed72cacf mtime 264072

num 0 gblnum 0 gblgen 0 gblbrk 0]

Found stale lock [type 10c00003 offset 153214976 v 8, hb offset 3440640

gen 7, mode 1, owner 5f80a174-7a6f9c7f-4a06-b8aeed72cacf mtime 2375897

num 0 gblnum 0 gblgen 0 gblbrk 0]

Found stale lock [type 10c00003 offset 287481856 v 6, hb offset 3440640

gen 7, mode 1, owner 5f80a174-7a6f9c7f-4a06-b8aeed72cacf mtime 2375696

num 0 gblnum 0 gblgen 0 gblbrk 0]

<redacted to save space>

Phase 3: Checking all file descriptors.

Found stale lock [type 10c00001 offset 71827456 v 5688, hb offset 3440640

gen 609, mode 1, owner 63fdfacc-c6c0eb27-c5e2-b8aeed72cacf mtime 2147

num 0 gblnum 0 gblgen 0 gblbrk 0]

Found stale lock [type 10c00001 offset 80044032 v 2493, hb offset 3440640

gen 239, mode 1, owner 60d0b4b3-52c8ad5d-fce9-b8aeed72cacf mtime 1643

num 0 gblnum 0 gblgen 0 gblbrk 0]

<redacted to save space>

Total Errors Found: 2The stale lock errors did seem to align with the second set of vmkernel logs included above. I went ahead and ran the command in fix mode with the syntax:

voma -m vmfs -f fix -d /vmfs/devices/disks/t10.ATA_____WDC_WDS500G1B0B2D00AS40__________________163763421472________Once complete I re-ran the check. It still showed one error (down from the origional 2), but the stale lock entries were no longer present. I re-registered the VMX files that I had previously removed, and great news — the affected VMs could be powered on!

The lesson here was a reminder to be patient when troubleshooting. Start with the easiest steps first, keep notes on what has been tried, and that logs are your friend. Also, in the real world, avoid having your disaster recovery and production environments share the same power feed.

Lesson #2: Its always DNS

While troubleshooting the VMFS issue above, I decided to power on other pieces of my lab, a Synology NAS and the hosts in my main ESXi cluster. This is different from my normal order of operations, as I normally wait for the ‘disaster recovery’ VMs to come online first. However, I didn’t know how long it would take to resolve that issue, and was thinking a rebuild of the backup domain controller / DNS server was going to be required. Promoting a new/replacement domain controller was going to require that the primary system come online anyway.

I waited an unusually long time for the clustered ESXi hosts to boot. I could ping them, but wasn’t able to reach the web interface to login. After awhile I gave up, powered one system off completely and focused on getting just one online. I power cycled that other system and gave it 10-15 minutes to boot… but still nothing. This host would normally power up in 3-5 minutes, so something else was wrong. I powered off the host again and rearranged gear so I could connect an external monitor. I powered on the system, and to my surprise ESXi was actually booting — I had assumed that there was going to be an error onscreen early in the boot process. I pressed ALT+F1 to watch the boot process. I was distracted for a bit, but when I looked back I finally saw the culprit causing my delay and was able to grab a picture:

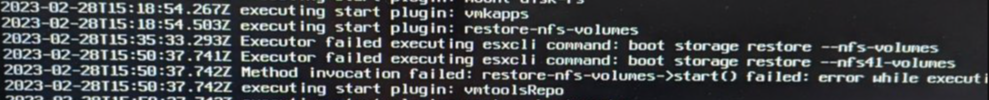

The process to bring my nfs-volumes online had a significant delay, over 30 minutes. I had not encountered this before, and immediately assumed something was wrong with my NAS. I logged in to its UI and everything looked healthy, and I was able to connect to an NFS mount as a test… so the NFS target wasn’t the cause of my problem. Since I finally waited long enough for the ESXi host to boot, I went to the command line and attempted to ping the IP addresses of the NAS, thinking I had a network connectivity / VLAN problem on my ESXi host facing switch ports. The vmkping commands worked without issue. Then it hit me. The VMFS issue described above prevented my disaster recovery DNS server from coming online, so when these ‘production’ hosts came online, they were not able to resolve the _name_ of my NFS server, and my NFS datastores were likely mounted by hostname, not IP address. I went to edit my ESXi hosts /etc/hosts file to test this theory, but noticed a line that said #Do not modify this file directly, please use esxcli. I checked the esxcli syntax and found the command I needed:

esxcli network ip hosts add --hostname=core-stor02.lab.enterpriseadmins.org --ip=192.168.56.21I confirmed the /etc/hosts file included the expected entry after running the command. I then rebooted the ESXi host — it came back online quickly, datastores were present, and VMs appeared. Everything powered on as expected.

Lesson learned. My ‘production’ hosts were actually dependent on name resolution being provided by the disaster recovery environment. I’ve added this host file entry on both of my management hosts (where primary DNS runs) so that I don’t encounter this issue again.

Lesson #3: Documentation is important

I was lucky in that I remembered hostnames/IP addresses used in my lab. If this were a larger environment, with more admins each knowing just their piece of the puzzle, this could have been much harder to recover. Some regular documentation of how things are setup, such as the output from an AsBuiltReport would have been valuable for this environment — especially if that output was store outside of the lab. In my case I had some documentation stored on the filesystem of a VM on my disaster recovery host — and that VM was impacted by the VMFS issue as well. Going forward, I may try to send similar report output to a third party repository every month so that it is stored completely outside of the lab it is documenting.