I recently did some performance comparisons between NFS and iSCSI on a Synology DS723+ NAS. When you create an iSCSI LUN on the Synology, a ‘Space Allocation’ drop-down offers two values: Thick Provisioning (better performance) and Thin Provisioning (flexible storage allocation). Selecting Thin Provisioning reveals a checkbox to enable space reclamation. In some basic, small-scale testing, I saw thick and thin LUNs perform similarly. However, I wanted to dig a bit deeper into the space reclamation functionality.

I suspected this reclamation functionality would map to the VAAI block storage UNMAP primitive, described in detail here: https://core.vmware.com/resource/vmware-vsphere-apis-array-integration-vaai#sec9410-sub6. This core.vmware.com article includes sample commands you can run from the ESXi host to verify thin provisioning status (`esxcli storage core device list -d naa.624a9370d4d78052ea564a7e00011030`) and whether Delete is supported (`esxcli storage core device vaai status get -d naa.624a9370d4d78052ea564a7e00011030`). These commands worked as expected. Every thin-provisioned LUN showed `Thin Provisioning Status: yes` with the first command, and the second command returned `Delete Status: supported` only when the ‘space reclamation’ box had been selected.

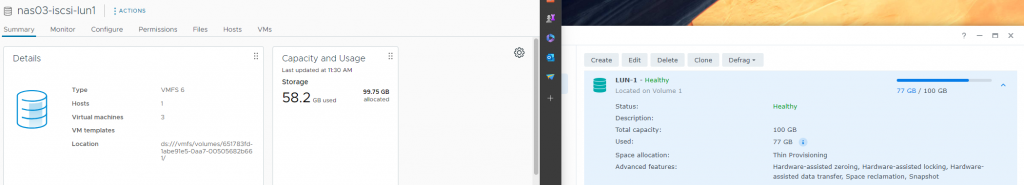
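The two checks above can be wrapped in a small script to test several LUNs at once. This is a minimal sketch, assuming the ESXi shell (BusyBox `sh`) and that the relevant output lines look like `Thin Provisioning Status: yes` and `Delete Status: supported`, as described above. The script name `check-unmap.sh` and its function names are hypothetical.

```sh
#!/bin/sh
# Hypothetical helper (check-unmap.sh): for each naa.* device ID passed as an
# argument, report whether ESXi sees it as thin provisioned and whether the
# VAAI Delete (UNMAP) primitive is supported.

# Reads `esxcli storage core device list -d <dev>` output on stdin;
# succeeds if the device reports "Thin Provisioning Status: yes".
is_thin() {
    grep -Eq 'Thin Provisioning Status:[[:space:]]*yes'
}

# Reads `esxcli storage core device vaai status get -d <dev>` output on stdin;
# succeeds only on "Delete Status: supported" (not "unsupported").
delete_supported() {
    grep -Eq 'Delete Status:[[:space:]]*supported'
}

check_device() {
    device=$1
    if esxcli storage core device list -d "$device" | is_thin; then
        echo "$device: thin provisioned"
    else
        echo "$device: not thin provisioned"
    fi
    if esxcli storage core device vaai status get -d "$device" | delete_supported; then
        echo "$device: VAAI Delete (UNMAP) supported"
    else
        echo "$device: VAAI Delete (UNMAP) not supported (is space reclamation enabled on the LUN?)"
    fi
}

# Does nothing when run without arguments.
for device in "$@"; do
    check_device "$device"
done
```

For example, `sh check-unmap.sh naa.624a9370d4d78052ea564a7e00011030` would print one line per check for that LUN.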

As expected, deleting a VM from an iSCSI datastore was immediately reflected in the datastore's usage from the ESXi perspective, but not from the NAS perspective. Waiting for automatic VMFS UNMAP would eventually bring the NAS view in line. To see immediate results, I ran `esxcli storage vmfs unmap -l nas03-iscsi-lun1` from the ESXi host. As you can see in the image below, after deleting a VM from the datastore, vSphere showed 58.2 GB used, while the same LUN on the NAS showed 77 GB used.

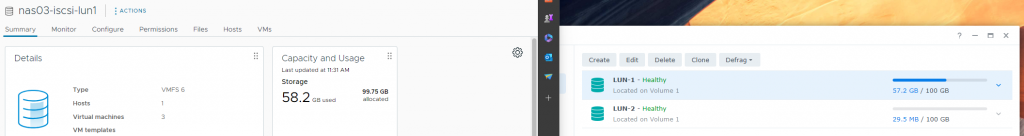
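The manual reclaim step can also be scripted so that the ESXi-side usage is printed right before UNMAP runs, which makes the comparison with the NAS easier. This sketch assumes that the last two columns of `esxcli storage filesystem list` are the datastore's Size and Free in bytes, and it matches the datastore label as a plain substring. The names `reclaim.sh`, `esxi_used`, and `reclaim` are hypothetical.

```sh
#!/bin/sh
# Hypothetical wrapper (reclaim.sh): print the ESXi-side used space for each
# datastore label passed as an argument, then run a manual VMFS UNMAP on it.

# Assumes the last two columns of `esxcli storage filesystem list` are
# Size and Free in bytes; matches rows containing the label as a substring.
esxi_used() {
    esxcli storage filesystem list |
        awk -v l="$1" 'index($0, l) > 0 {
            printf "%s: %.1f GiB used (ESXi view)\n", l, ($(NF-1) - $NF) / 1073741824
        }'
}

reclaim() {
    esxi_used "$1"
    echo "Running UNMAP on $1 ..."
    # Issues UNMAP for free VMFS blocks so the array can reclaim them.
    esxcli storage vmfs unmap -l "$1" || return 1
    echo "UNMAP finished; compare with the LUN's used space on the NAS."
}

# Does nothing when run without arguments.
for label in "$@"; do
    reclaim "$label"
done
```

Running `sh reclaim.sh nas03-iscsi-lun1` would be equivalent to the manual command above, with the ESXi-side number printed first for reference.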

Running the `esxcli storage vmfs unmap -l nas03-iscsi-lun1` command immediately caused the Synology view to update to numbers very close to what vSphere reported.

Automatic UNMAP is not new, but it was interesting to see in practice. For the last several years I’ve been using NFS datastores in my lab, where deleting a file from the datastore deletes the same file from the NAS filesystem directly, so I hadn’t looked closely at this functionality. A more thorough article describing the capabilities and settings can be found here: https://www.codyhosterman.com/2017/08/monitoring-automatic-vmfs-6-unmap-in-esxi/. The article is several years old, but I tested many of its commands on an ESXi 8.0 U1 host and got very similar output.
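To confirm that automatic UNMAP is actually enabled on a datastore, VMFS-6 exposes its settings through `esxcli storage vmfs reclaim config get`. The sketch below assumes that command's output includes a `Reclaim Priority:` line, with `none` meaning automatic UNMAP is disabled; the script and function names are hypothetical. A datastore reporting `none` can be switched back with `esxcli storage vmfs reclaim config set --volume-label <label> --reclaim-priority low`.

```sh
#!/bin/sh
# Hypothetical check (auto-unmap.sh): report whether automatic UNMAP is
# enabled for each VMFS-6 datastore label passed as an argument.

# Reads `esxcli storage vmfs reclaim config get` output on stdin and prints
# the value of the "Reclaim Priority" line (e.g. "low" or "none").
reclaim_priority() {
    awk -F': *' '/Reclaim Priority/ { v = $2; sub(/[ \t\r]+$/, "", v); print v }'
}

check_auto_unmap() {
    prio=$(esxcli storage vmfs reclaim config get --volume-label "$1" | reclaim_priority)
    case "$prio" in
        none) echo "$1: automatic UNMAP disabled (Reclaim Priority: none)" ;;
        "")   echo "$1: could not read Reclaim Priority" ;;
        *)    echo "$1: automatic UNMAP enabled (Reclaim Priority: $prio)" ;;
    esac
}

# Does nothing when run without arguments.
for label in "$@"; do
    check_auto_unmap "$label"
done
```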